Introduction:

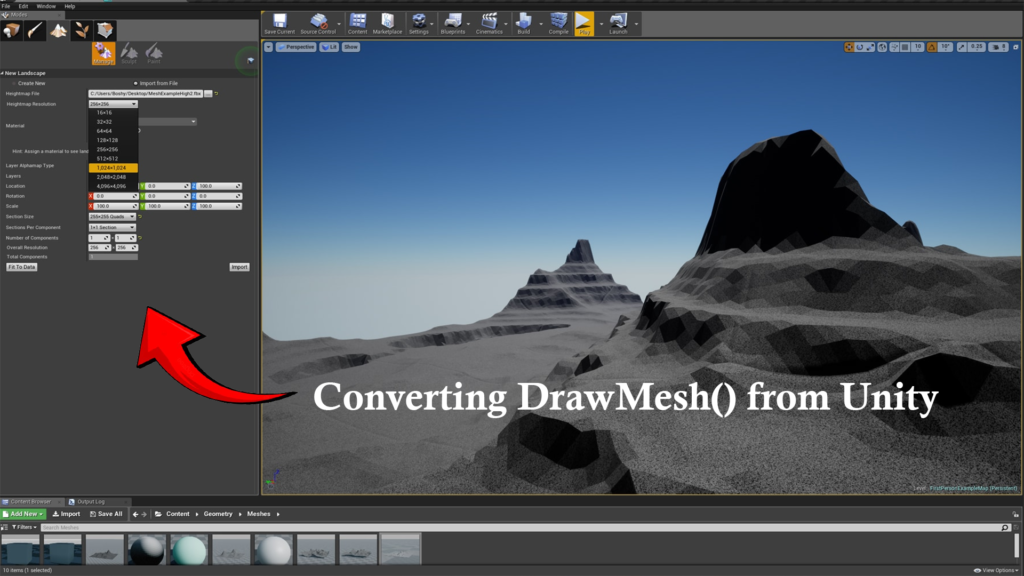

Virtual Reality (VR) development in Unreal Engine 4.25 offers a powerful platform for building immersive experiences. However, transitioning from Unity to Unreal Engine can present challenges, particularly when adapting specific features. One common task in VR is handling the camera position, as this directly affects the player’s perception of the virtual world. If you’re moving from Unity’s DrawMesh() functionality to Unreal Engine, understanding how to correctly handle camera positioning in VR is key.

In this article, we’ll show you how to set the camera position in VR within Unreal Engine 4.25, along with a quick overview of how to convert Unity’s DrawMesh() to Unreal’s rendering approach. We’ll cover everything from configuring the camera for VR to making sure your meshes are rendered at the right positions in a virtual environment.

Why Camera Position is Essential in VR

In VR, the camera’s position is the player’s viewpoint. This is especially crucial for creating natural and immersive experiences. If the camera is misaligned or poorly placed, the player may feel disoriented or uncomfortable. Setting the camera position correctly ensures the player can interact with the virtual world naturally and comfortably.

In Unreal Engine 4.25, VR development is made easier by the VR template, which automatically configures key settings like the camera, player controller, and VR input. However, if you’re transitioning from Unity to Unreal and need custom camera positioning in VR (such as when converting DrawMesh() functionality from Unity), you may need to fine-tune some settings.

How to Set Camera Position in VR in Unreal Engine 4.25

Before diving into how this relates to Unity’s DrawMesh(), let’s first cover how to set up the VR camera position in Unreal Engine 4.25.

Step 1: Set Up a VR Project

To begin working with VR in Unreal Engine 4.25, make sure you’re using the right template and settings.

- Start a new project: When creating a new project in Unreal Engine, select the Virtual Reality (VR) template so that all required VR features are already set up for you.

- Set up your VR hardware: Confirm that your VR hardware (such as Oculus Rift, HTC Vive, or Windows Mixed Reality) is connected and properly configured with Unreal Engine.

- Enable VR plugins: Go to Edit > Plugins, then enable the required VR plugins for your hardware (e.g., Oculus VR, SteamVR).

Step 2: Access the VR Camera in Unreal Engine

In Unreal Engine, the camera used in VR is generally set up through a Pawn or Character class. The camera is linked to the VR headset and follows the movements of the player’s head, so its position changes as the player moves through the virtual world.

Here’s how to access and change the camera position:

- Locate the VR Pawn or Character: In your VR project, you probably have a custom Pawn or Character that handles the player’s movement.

- Find the Camera Component: Inside the Pawn or Character class, find the CameraComponent that’s responsible for the VR camera. This camera tracks the movements of the VR headset.

- Change the Camera Position: You can change the camera’s position programmatically via Blueprint or C++. For example, you can override the camera’s position based on user input or predefined locations.

Example in C++ for changing the camera position:

FVector CameraOffset(0.f, 0.f, 180.f);

CameraComponent->SetRelativeLocation(CameraOffset);

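This code raises the camera by 180 units (180 cm at Unreal’s default scale) relative to its parent component, simulating a standing user in VR. Keep in mind that when the camera is locked to the headset, the engine updates the camera’s relative transform from tracking data every frame, so an offset like this is usually applied to the camera’s parent component rather than to the camera itself.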
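Whether a height offset is needed at all depends on where the tracking origin sits. Unreal distinguishes floor-level and eye-level tracking origins: with floor-level tracking the headset already reports the player’s real head height, while with eye-level tracking the reported height is close to zero. The following is a minimal sketch of that decision in plain C++, not engine code. `Vec3`, `TrackingOrigin`, `OriginOffsetFor`, and `CameraRelativeToPawn` are hypothetical stand-ins introduced for illustration.

```cpp
#include <cassert>

// Stand-in for Unreal's FVector (centimeters, Z up); not an engine type.
struct Vec3 {
    float X, Y, Z;
};

Vec3 operator+(const Vec3& A, const Vec3& B) {
    return {A.X + B.X, A.Y + B.Y, A.Z + B.Z};
}

// Hypothetical mirror of the floor-level and eye-level tracking origins.
enum class TrackingOrigin { Floor, Eye };

// Offset for the camera's parent component (often called the VR origin).
// Floor-level tracking already includes the real head height, so nothing is added.
// Eye-level tracking reports heights near zero, so the eye height is added here.
Vec3 OriginOffsetFor(TrackingOrigin Mode, float EyeHeightCm) {
    assert(EyeHeightCm >= 0.f);
    return Mode == TrackingOrigin::Eye ? Vec3{0.f, 0.f, EyeHeightCm}
                                       : Vec3{0.f, 0.f, 0.f};
}

// Camera location relative to the pawn: parent offset plus tracked head position.
Vec3 CameraRelativeToPawn(const Vec3& OriginOffset, const Vec3& TrackedHead) {
    return OriginOffset + TrackedHead;
}
```

In an actual project, the offset returned here would be applied to the scene component that the camera is attached to, and the tracked head position would come from the headset rather than being passed in by hand.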

Step 3: Camera Position Based on VR Input

To make the camera position dynamic, you can tie the camera’s position to the VR headset’s position in the world. Unreal Engine does this automatically for most VR setups, but you can adjust it based on specific requirements.

For instance, if you’re making a first-person VR experience, you might want the camera to follow the player’s head movements precisely. You can use VR tracking data to get the position of the player’s head and update the camera’s position in real time.

Example in C++:

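FRotator HeadRotation; FVector HeadPosition; // headset pose in tracking space

UHeadMountedDisplayFunctionLibrary::GetOrientationAndPosition(HeadRotation, HeadPosition);

CameraComponent->SetRelativeLocationAndRotation(HeadPosition, HeadRotation);

This code snippet reads the headset pose with UHeadMountedDisplayFunctionLibrary::GetOrientationAndPosition() (declared in HeadMountedDisplayFunctionLibrary.h, part of the HeadMountedDisplay module) and applies it relative to the camera’s parent, because the pose is reported in tracking space rather than world space. When the camera is locked to the headset, the engine already performs this update for you, so manual tracking like this is only needed for custom setups.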
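Headset positions are typically reported relative to a tracking origin, not in world coordinates, so converting them means rotating by the origin’s orientation and adding the origin’s world location. Below is a minimal sketch of that conversion in plain C++, assuming the origin only rotates around the vertical axis (yaw). `Vec3`, `RotateYaw`, and `TrackingToWorld` are hypothetical names, not engine API.

```cpp
#include <cassert>
#include <cmath>

// Stand-in for Unreal's FVector (centimeters, X forward, Y right, Z up).
struct Vec3 {
    double X, Y, Z;
};

// Rotates V around the Z axis by YawDegrees; positive yaw turns +X toward +Y.
Vec3 RotateYaw(const Vec3& V, double YawDegrees) {
    assert(std::isfinite(YawDegrees));
    const double Pi = 3.14159265358979323846;
    const double Rad = YawDegrees * Pi / 180.0;
    const double C = std::cos(Rad);
    const double S = std::sin(Rad);
    return {V.X * C - V.Y * S, V.X * S + V.Y * C, V.Z};
}

// Converts a head position from tracking space to world space, given the
// world location and yaw of the tracking origin.
Vec3 TrackingToWorld(const Vec3& OriginLocation, double OriginYawDegrees,
                     const Vec3& HeadInTracking) {
    const Vec3 R = RotateYaw(HeadInTracking, OriginYawDegrees);
    return {OriginLocation.X + R.X, OriginLocation.Y + R.Y, OriginLocation.Z + R.Z};
}
```

Inside the engine, attaching the camera to a parent scene component achieves the same result without manual math, since relative transforms are composed with the parent’s world transform.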

Converting Unity’s DrawMesh() to Unreal Engine

Unity’s DrawMesh() method lets developers render meshes at a specific position in the scene, with a customizable transform, material, and other properties. It submits the mesh for a single frame without creating a GameObject. Unreal Engine works a bit differently: its rendering pipeline takes a more structured approach, in which meshes are rendered through components that persist until they are removed.

When converting Unity’s DrawMesh() to Unreal, you will generally use StaticMeshComponent or ProceduralMeshComponent for custom meshes. If you’re working with VR, you’ll need to make sure that meshes are placed precisely relative to the VR camera, particularly since the camera’s position changes as the user moves.
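Because DrawMesh() is typically called every frame while Unreal’s mesh components persist, a direct port that creates a new component on each call would keep accumulating components. One possible way to bridge the two models is to reuse a pool of persistent slots and hide the ones not drawn in the current frame. The sketch below illustrates the idea in plain C++. `MeshSlot` and `MeshDrawPool` are hypothetical stand-ins; in a real project each slot would wrap a mesh component whose mesh, location, and visibility are updated.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for Unreal's FVector; not an engine type.
struct Vec3 {
    float X, Y, Z;
};

// Stand-in for a persistent mesh component (hypothetical, for illustration).
struct MeshSlot {
    int MeshId = -1;
    Vec3 Location{0.f, 0.f, 0.f};
    bool bVisible = false;
};

// Emulates per-frame "draw" calls on top of persistent slots.
class MeshDrawPool {
public:
    // Call once at the start of each frame.
    void BeginFrame() { UsedThisFrame = 0; }

    // Reuses the next free slot for this frame, creating one only if needed.
    void Draw(int MeshId, const Vec3& Location) {
        assert(UsedThisFrame <= Slots.size());
        if (UsedThisFrame == Slots.size()) {
            Slots.push_back(MeshSlot{});
        }
        MeshSlot& Slot = Slots[UsedThisFrame++];
        Slot.MeshId = MeshId;
        Slot.Location = Location;
        Slot.bVisible = true;
    }

    // Call once at the end of each frame: hides slots that were not drawn.
    void EndFrame() {
        for (std::size_t i = UsedThisFrame; i < Slots.size(); ++i) {
            Slots[i].bVisible = false;
        }
    }

    std::size_t SlotCount() const { return Slots.size(); }

    std::size_t VisibleCount() const {
        std::size_t Count = 0;
        for (const MeshSlot& Slot : Slots) {
            if (Slot.bVisible) { ++Count; }
        }
        return Count;
    }

private:
    std::vector<MeshSlot> Slots;
    std::size_t UsedThisFrame = 0;
};
```

With this pattern, drawing the same two meshes on every frame keeps the pool at two slots instead of growing by two per frame.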

Example: Rendering a Mesh in Unreal Engine Based on Camera Position

To replicate Unity’s DrawMesh() behavior in Unreal, you can use a StaticMeshComponent to render a mesh at a specific location. Here’s an example of how to do this:

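void AMyActor::RenderMeshAtCameraPosition()

{

// Current world-space location of the VR camera.

const FVector CameraLocation = CameraComponent->GetComponentLocation();

// Creates a new persistent component on every call, so call this once rather than per frame.

UStaticMeshComponent* MeshComp = NewObject<UStaticMeshComponent>(this);

MeshComp->SetStaticMesh(MyMesh);

// Attach and register first; setting the world location afterwards keeps it from being reinterpreted as a relative offset.

MeshComp->SetupAttachment(RootComponent);

MeshComp->RegisterComponent();

MeshComp->SetWorldLocation(CameraLocation + FVector(0, 0, 200));

}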

In this example:

- We retrieve the camera’s current position using GetComponentLocation().

- Then, we place the mesh 200 units above the camera’s position (+ FVector(0, 0, 200)), keeping it close to the user’s view. To place it directly in the user’s field of view, offset along the camera’s forward direction instead.
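An offset straight up puts the mesh overhead, so the player only sees it when looking up. To keep a mesh in front of the player, the offset can follow the camera’s forward direction. The sketch below shows the underlying math in plain C++, assuming Unreal’s axis convention (X forward, Y right, Z up) and a camera described by yaw and pitch in degrees. `Vec3`, `ForwardFrom`, and `PointInFrontOf` are hypothetical names, not engine API.

```cpp
#include <cassert>
#include <cmath>

// Stand-in for Unreal's FVector (centimeters, X forward, Y right, Z up).
struct Vec3 {
    double X, Y, Z;
};

// Unit forward vector for a yaw/pitch pair given in degrees.
Vec3 ForwardFrom(double YawDegrees, double PitchDegrees) {
    const double Pi = 3.14159265358979323846;
    const double Yaw = YawDegrees * Pi / 180.0;
    const double Pitch = PitchDegrees * Pi / 180.0;
    return {std::cos(Pitch) * std::cos(Yaw),
            std::cos(Pitch) * std::sin(Yaw),
            std::sin(Pitch)};
}

// World-space point DistanceCm in front of the camera.
Vec3 PointInFrontOf(const Vec3& CameraLocation, double YawDegrees,
                    double PitchDegrees, double DistanceCm) {
    assert(DistanceCm >= 0.0);
    const Vec3 F = ForwardFrom(YawDegrees, PitchDegrees);
    return {CameraLocation.X + F.X * DistanceCm,
            CameraLocation.Y + F.Y * DistanceCm,
            CameraLocation.Z + F.Z * DistanceCm};
}
```

In engine code, the forward direction would normally come from the camera component itself (scene components expose GetForwardVector()) rather than being rebuilt from angles.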

Conclusion

Setting the camera position in Unreal Engine 4.25 for VR is straightforward, but it’s essential to understand how Unreal handles camera movement and mesh rendering. When transitioning from Unity to Unreal, adapting custom methods like DrawMesh() requires learning Unreal’s rendering pipeline, which uses components like StaticMeshComponent and ProceduralMeshComponent for dynamic mesh placement.